I am pretty sure you have already read or heard about scenarios where self-driving cars have to make decisions in life-threatening situations, right?

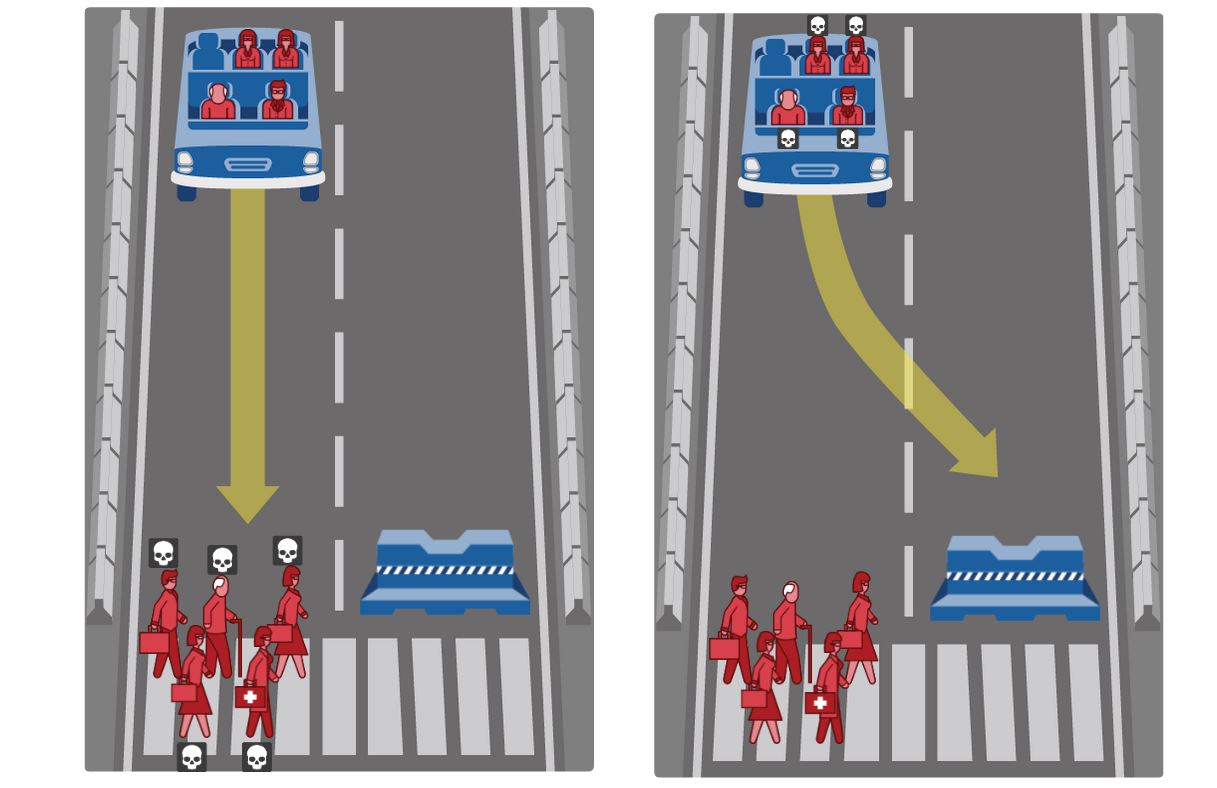

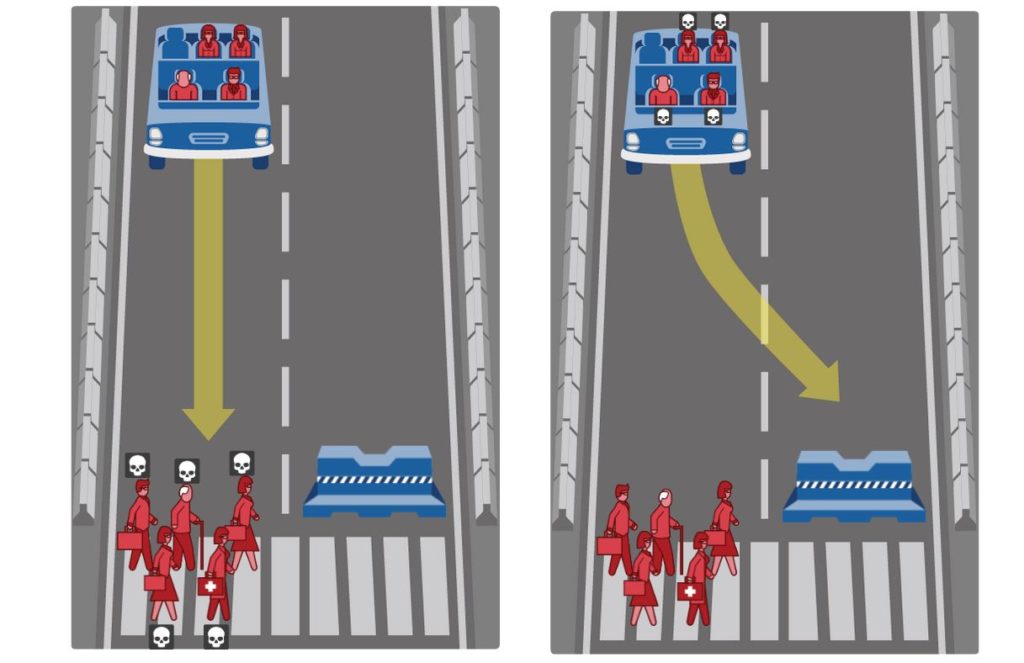

For example, what should a self-driving car do if it has to decide between the lives of its passengers and the lives of others? Should it consider the age of the people? The number of people involved? Are humans more important than animals? Should one child be saved instead of two adults?

A few years ago, the MIT Media Lab launched a very interesting survey website called Moral Machine, supported by The Ethics and Governance of Artificial Intelligence Fund. The research project, led by Iyad Rahwan, Jean-Francois Bonnefon and Azim Shariff, presents participants with different scenarios in which a self-driving car must make a decision.

Here is an insightful presentation by Iyad Rahwan at a TED event about the project.

So far, the survey has collected around 40 million decisions from respondents in many countries, allowing cross-cultural insights.

Would you like to give it a try?

PARTICIPATE: TRY IT FOR YOURSELF!

Click on the image below to start judging the scenarios. You will quickly find that these decisions are much harder than they seem.

It may seem that you are simply clicking “left” or “right”. But in essence you are saying a great deal about yourself, what you stand for, your moral values and more.

PUBLICATIONS

The study has led to high-impact publications in world-leading scientific journals. Here are some examples:

- E. Awad, S. Dsouza, R. Kim, J. Schulz, J. Henrich, A. Shariff, J.-F. Bonnefon, I. Rahwan (2018). The Moral Machine experiment. Nature.

- R. Noothigattu, S. Gaikwad, E. Awad, S. Dsouza, I. Rahwan, P. Ravikumar, A. D. Procaccia (2017). A Voting-Based System for Ethical Decision Making. arXiv.

- A. Shariff, J.-F. Bonnefon, I. Rahwan (2017). Psychological roadblocks to the adoption of self-driving vehicles. Nature Human Behaviour.

- J.-F. Bonnefon, A. Shariff, I. Rahwan (2016). The Social Dilemma of Autonomous Vehicles. Science, 352(6293).

CODING AND ETHICS

It comes as no surprise that Yuval Noah Harari has repeatedly mentioned the need for tech companies to employ philosophers. Coding has become an art of defining ethical decisions based on societal and cultural values.

But in the interest of whom?

More and more, our lives will be shaped by algorithms making decisions “by themselves”: from the films we decide to watch, to the food we order, to whom we interact with online and, depending on the situation, whether we survive or not.

This is what makes the Moral Machine project so important.

In the near future, the term “free will” will have a completely new meaning.